- Docker Container With Docker Installed Ubuntu

- Docker Container With Docker Installed Centos

- Docker Image With Docker Installed

- Docker Container With Docker Installed Windows

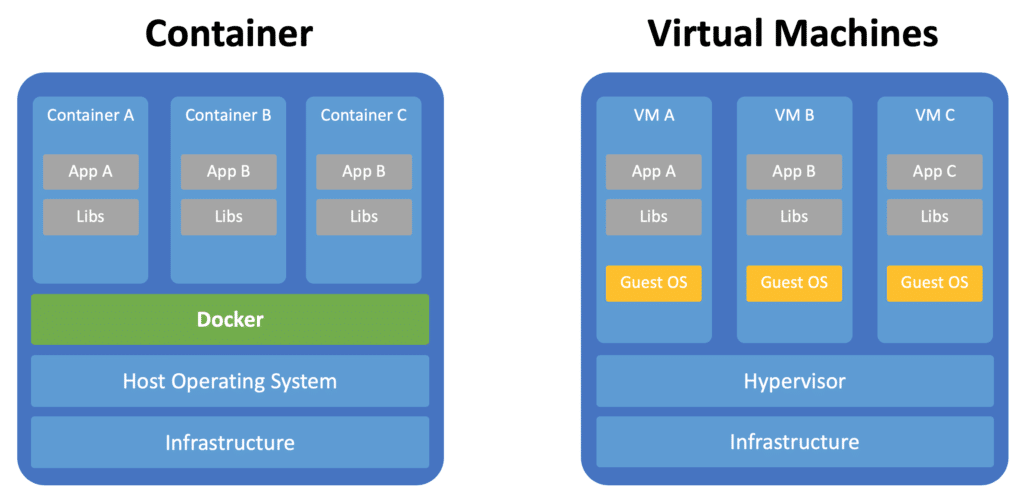

Because a Docker container runs directly on your host OS, it does not need to boot a guest operating system, which saves precious start-up time. This is a clear advantage over a virtual machine. Consider a situation where I want to install two different versions of Ruby on my system.

Docker uses containers to create virtual environments that isolate a TensorFlow installation from the rest of the system. TensorFlow programs are run within this virtual environment that can share resources with its host machine (access directories, use the GPU, connect to the Internet, etc.). The TensorFlow Docker images are tested for each release.

Docker is the easiest way to enable TensorFlow GPU support on Linux since only the NVIDIA® GPU driver is required on the host machine (the NVIDIA® CUDA® Toolkit does not need to be installed).

TensorFlow Docker requirements

- You can even run graphical applications (if they are installed in the container) using X11 forwarding to the SSH client. For example, `ssh -X username@IPADDRESS xeyes` runs an X11 demo app in the client.

- Docker Compose relies on Docker Engine for any meaningful work, so make sure you have Docker Engine installed either locally or remotely, depending on your setup. Docker Desktop for Mac and Windows includes Docker Compose as part of the desktop install.

- Install Docker on your local host machine.

- For GPU support on Linux, install NVIDIA Docker support.

- Take note of your Docker version with `docker -v`. Versions earlier than 19.03 require nvidia-docker2 and the `--runtime=nvidia` flag. On versions including and after 19.03, you will use the `nvidia-container-toolkit` package and the `--gpus all` flag. Both options are documented on the page linked above.

To run the `docker` command without `sudo`, create the `docker` group and add your user. For details, see the post-installation steps for Linux.
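
The version rule above can be sketched as a small shell helper. This is only an illustration: `gpu_flag_for_docker` is a made-up name, and it assumes a plain `major.minor.patch` version string such as `19.03.5`.

```shell
# Hypothetical helper: map a Docker version string to the GPU flag
# described above (19.03 and later use --gpus all, older versions
# use --runtime=nvidia together with nvidia-docker2).
gpu_flag_for_docker() {
  version="$1"
  major="${version%%.*}"   # text before the first dot
  rest="${version#*.}"     # text after the first dot
  minor="${rest%%.*}"      # text between the first and second dot
  if [ "$major" -gt 19 ] || { [ "$major" -eq 19 ] && [ "$minor" -ge 3 ]; }; then
    echo "--gpus all"
  else
    echo "--runtime=nvidia"
  fi
}

# Possible usage once Docker is installed:
#   gpu_flag_for_docker "$(docker version --format '{{.Server.Version}}')"
```

The helper only compares the first two numeric components, so suffixes in the patch field do not affect the result.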

Download a TensorFlow Docker image

The official TensorFlow Docker images are located in the tensorflow/tensorflow Docker Hub repository. Image releases are tagged using the following format:

| Tag | Description |
|---|---|
| `latest` | The latest release of TensorFlow CPU binary image. Default. |
| `nightly` | Nightly builds of the TensorFlow image. (Unstable.) |
| *version* | Specify the version of the TensorFlow binary image, for example: 2.1.0 |
| `devel` | Nightly builds of a TensorFlow master development environment. Includes TensorFlow source code. |
| `custom-op` | Special experimental image for developing TF custom ops. More info here. |

Each base tag has variants that add or change functionality:

| Tag Variants | Description |
|---|---|
| `tag-gpu` | The specified tag release with GPU support. (See below) |
| `tag-jupyter` | The specified tag release with Jupyter (includes TensorFlow tutorial notebooks) |

You can use multiple variants at once. For example, the following downloads TensorFlow release images to your machine:
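
As a sketch of how base tags and variants combine, the helpers below build an image reference and pull it. The names `tf_image` and `tf_pull` are made up for illustration, and the variant order (`gpu` before `jupyter`) is assumed from tags such as `latest-gpu-jupyter`.

```shell
# Hypothetical helper: join a base tag and any variants with "-"
# and prefix the official Docker Hub repository name.
tf_image() {
  tag="$1"
  shift
  for variant in "$@"; do
    tag="$tag-$variant"
  done
  echo "tensorflow/tensorflow:$tag"
}

# Hypothetical helper: download the composed image from Docker Hub.
tf_pull() {
  docker pull "$(tf_image "$@")"
}
```

With these definitions, `tf_pull latest` fetches the default CPU image, `tf_pull devel gpu` fetches `tensorflow/tensorflow:devel-gpu`, and `tf_pull latest gpu jupyter` fetches `tensorflow/tensorflow:latest-gpu-jupyter`.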

Start a TensorFlow Docker container

To start a TensorFlow-configured container, use the following command form:
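
The general shape is `docker run`, optional flags, the image reference, and an optional command. A minimal sketch as a wrapper function follows; `tf_run` is an illustrative name, and always passing `-it --rm` is a choice made here, not a requirement.

```shell
# General form:
#   docker run [-it] [--rm] [-p hostPort:containerPort] tensorflow/tensorflow[:tag] [command]
#
# Hypothetical wrapper: the first argument is the image tag, the rest
# is the command to run inside the container.
tf_run() {
  tag="$1"
  shift
  # -it   attach an interactive terminal
  # --rm  remove the container when the command exits
  docker run -it --rm "tensorflow/tensorflow:$tag" "$@"
}
```

For example, `tf_run latest bash` would open a shell in a throwaway container built from the `latest` image.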

For details, see the docker run reference.

Examples using CPU-only images

Let's verify the TensorFlow installation using the latest tagged image. Docker downloads a new TensorFlow image the first time it is run:
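
One way to run such a check is a one-off Python command inside a throwaway container. The sketch below assumes the default `tensorflow/tensorflow` image; `tf_verify` is a made-up name.

```shell
# Hypothetical helper: import TensorFlow in a temporary container and
# print the sum of a random 1000x1000 tensor as a smoke test.
tf_verify() {
  image="${1:-tensorflow/tensorflow}"   # defaults to the latest CPU image
  docker run -it --rm "$image" \
    python -c "import tensorflow as tf; print(tf.reduce_sum(tf.random.normal([1000, 1000])))"
}
```

Calling `tf_verify` should end by printing a tensor value; the number differs on every run because the input is random.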

Success: TensorFlow is now installed. Read the tutorials to get started.

Let's demonstrate some more TensorFlow Docker recipes. Start a bash shell session within a TensorFlow-configured container:
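
A shell session only needs `bash` as the container command. A minimal sketch, with `tf_shell` as an illustrative name:

```shell
# Hypothetical helper: open an interactive bash shell in a TensorFlow image.
tf_shell() {
  image="${1:-tensorflow/tensorflow}"
  docker run -it "$image" bash
}
```

Because `--rm` is omitted here, the stopped container stays on disk after you exit the shell and can be removed later with `docker rm`.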

Within the container, you can start a python session and import TensorFlow.

To run a TensorFlow program developed on the host machine within a container, mount the host directory and change the container's working directory (`-v hostDir:containerDir -w workDir`):

Permission issues can arise when files created within a container are exposed to the host. It's usually best to edit files on the host system.
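
As a sketch, the current host directory can be mounted at `/tmp` in the container and used as the working directory. The function name `tf_run_script` and the choice of `/tmp` as the mount point are assumptions for illustration.

```shell
# Hypothetical helper: run a host-side Python script inside the container.
tf_run_script() {
  script="$1"   # path relative to the current host directory
  # -v "$PWD":/tmp  mount the current host directory at /tmp
  # -w /tmp         start the command in that directory
  docker run -it --rm -v "$PWD":/tmp -w /tmp tensorflow/tensorflow python "$script"
}
```

For example, `tf_run_script ./script.py` runs a `script.py` located in the directory you call it from.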

Start a Jupyter Notebook server using TensorFlow's nightly build:

Follow the instructions and open the URL in your host web browser: http://127.0.0.1:8888/?token=...
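
A minimal sketch, assuming the `nightly-jupyter` tag and a notebook server listening on container port 8888; `tf_jupyter` is a made-up name.

```shell
# Hypothetical helper: start the Jupyter image and publish its port.
tf_jupyter() {
  host_port="${1:-8888}"   # port on the host; the container side stays 8888
  docker run -it -p "$host_port":8888 tensorflow/tensorflow:nightly-jupyter
}
```

If port 8888 is already in use on the host, `tf_jupyter 9000` publishes the server on port 9000 instead, and the port in the browser URL changes to match.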

GPU support

Docker is the easiest way to run TensorFlow on a GPU since the host machine only requires the NVIDIA® driver (the NVIDIA® CUDA® Toolkit is not required).

Install the NVIDIA Container Toolkit to add NVIDIA® GPU support to Docker. `nvidia-container-runtime` is only available for Linux. See the `nvidia-container-runtime` platform support FAQ for details.

Check if a GPU is available:
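
On Linux, one common check is to search the PCI device list for NVIDIA hardware. The sketch below assumes `lspci` (from the pciutils package) is installed; `has_nvidia_gpu` is an illustrative name.

```shell
# Hypothetical helper: print matching PCI devices and succeed only if
# at least one line mentions NVIDIA (case-insensitive).
has_nvidia_gpu() {
  lspci | grep -i nvidia
}
```

The function's exit status follows `grep`, so it can be used in a condition such as `if has_nvidia_gpu; then ...; fi`.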

Verify your nvidia-docker installation:
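
A typical check runs `nvidia-smi` inside a CUDA base image with GPUs attached. In this sketch the image reference is a required argument, because which `nvidia/cuda` tag to use depends on your driver and is not fixed here; `gpu_smoke_test` is a made-up name.

```shell
# Hypothetical helper: run nvidia-smi in a container that has GPU access.
gpu_smoke_test() {
  cuda_image="$1"   # a tag you chose from the nvidia/cuda repository on Docker Hub
  docker run --gpus all --rm "$cuda_image" nvidia-smi
}
```

If the toolkit is set up correctly, the output should be the usual `nvidia-smi` table listing your GPUs.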

nvidia-docker v2 uses `--runtime=nvidia` instead of `--gpus all`. nvidia-docker v1 uses the `nvidia-docker` alias, rather than the `--runtime=nvidia` or `--gpus all` command line flags.

Examples using GPU-enabled images

Download and run a GPU-enabled TensorFlow image (may take a few minutes):
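
As a sketch for Docker 19.03 or later, the same smoke test used for the CPU image can be run against the `latest-gpu` tag with `--gpus all`. The name `tf_gpu_verify` is illustrative.

```shell
# Hypothetical helper: run a TensorFlow smoke test with all GPUs attached.
tf_gpu_verify() {
  docker run --gpus all -it --rm tensorflow/tensorflow:latest-gpu \
    python -c "import tensorflow as tf; print(tf.reduce_sum(tf.random.normal([1000, 1000])))"
}
```

On nvidia-docker v2 setups, `--runtime=nvidia` would take the place of `--gpus all`.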

It can take a while to set up the GPU-enabled image. If repeatedly running GPU-based scripts, you can use `docker exec` to reuse a container.
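
One possible way to reuse a container is to start it once in the background under a fixed name and then run each script with `docker exec`. Both function names and the idea of keeping the container alive with a detached `bash` are assumptions of this sketch.

```shell
# Hypothetical helper: start a named GPU container that keeps running.
tf_gpu_start() {
  name="$1"
  # -d runs detached; -it keeps bash alive so the container does not exit
  docker run --gpus all -d -it --name "$name" tensorflow/tensorflow:latest-gpu bash
}

# Hypothetical helper: run a command in the already running container.
tf_gpu_exec() {
  name="$1"
  shift
  docker exec -it "$name" "$@"
}
```

For example, `tf_gpu_start tf-gpu` followed by `tf_gpu_exec tf-gpu python -V` starts the container once and then runs Python inside it without paying the start-up cost again. Remove the container with `docker rm -f tf-gpu` when finished.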

Use the latest TensorFlow GPU image to start a bash shell session in the container:
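
A minimal sketch, again assuming Docker 19.03 or later; `tf_gpu_shell` is a made-up name.

```shell
# Hypothetical helper: open bash in the latest GPU image with all GPUs attached.
tf_gpu_shell() {
  docker run --gpus all -it tensorflow/tensorflow:latest-gpu bash
}
```

Inside the shell, running `nvidia-smi` is a quick way to confirm that the container can see the GPU.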

Success: TensorFlow is now installed. Read the tutorials to get started.